We have previously mentioned the vital role of convolutional neural networks in various face recognition applications, including MDVR. Before discussing convolutional neural networks further, we should briefly describe what a "regular" neural network actually is and define the term convolution. The general aim of a neural network is to simulate a large number of interconnected brain cells inside a computer and "train" it to recognize patterns and features, learn things, and make decisions in a human-like manner. Neural networks constructed in this way are often referred to as artificial neural networks (ANNs), to distinguish them from the "real" neural networks in the human brain. It is important to keep in mind that an ANN is still fundamentally a software simulation: a collection of algebraic variables and the mathematical equations linking them together. It is not an actual computer built by wiring up transistors in a densely parallel manner so that it looks and operates exactly like a human brain.

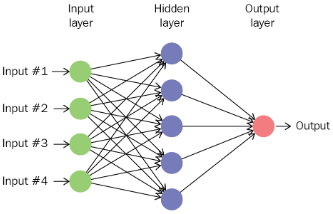

A typical neural network consists of many artificial neurons, called units (usually a few hundred to a few million). These units are arranged in a series of layers, each of which connects to the layers on either side. Input units receive the various forms of information that the network will try to learn about. Output units, located on the opposite side of the network, signal how it responds to the information it has learned. Hidden units sit between the input and output units and form the major part of the "artificial brain". Each connection between units carries a weight, which is either a positive or a negative number. The larger the magnitude of the weight, the more influence one unit has on the other.
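To make the role of weights concrete, here is a minimal sketch of how one unit combines the signals arriving over its connections. The function name `weighted_input` and all numbers are illustrative assumptions, not taken from any particular library.

```python
def weighted_input(inputs, weights):
    """Sum of each incoming signal multiplied by the weight of its connection."""
    return sum(x * w for x, w in zip(inputs, weights))


# Three incoming signals of equal strength.
inputs = [1.0, 1.0, 1.0]
# Hypothetical weights: strongly positive, weakly positive, negative.
weights = [0.9, 0.1, -0.4]

total = weighted_input(inputs, weights)
# Per-connection contributions: the first dominates, the third pulls the total down.
contributions = [x * w for x, w in zip(inputs, weights)]
```

Although all three signals are equally strong, the first connection dominates the total because its weight is the largest, while the negative weight works against the other two.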

There are two ways for information to propagate through the network: feedforward and backpropagation. In the feedforward case, information enters through the input units, passes through the hidden layers, and arrives at the output units. Each unit receives inputs from the units on its left, and these inputs are multiplied by the weights of the connections they travel along. Each unit adds up the weighted inputs it receives, and if the sum is higher than a threshold value, the unit fires and triggers the units it is connected to on its right. In the backpropagation case, information flows from output to input. The output that the network has produced is compared with the output it was meant to produce, and the difference between them is used to modify the weights of the connections. In this way, backpropagation helps the network learn by decreasing the difference between the intended and actual output. Once the network has been trained on a sufficient number of examples, a user can present it with a new set of inputs that it has not seen before and observe how it responds.
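The two directions of flow can be sketched on the smallest possible network, a single threshold unit. Note that this uses the classic perceptron learning rule as a simplified stand-in: full backpropagation applies the same idea (adjust the weights according to the output error) across many layers. The function names, learning rate, and training data are assumptions chosen for illustration.

```python
def fires(inputs, weights, threshold):
    """Forward step: the unit outputs 1 if its weighted input exceeds the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


def train_unit(examples, n_inputs, rate=0.1, epochs=20):
    """Adjust the weights using the difference between intended and actual output."""
    weights = [0.0] * n_inputs
    threshold = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - fires(inputs, weights, threshold)
            # Nudge each weight in the direction that shrinks the error.
            weights = [w + rate * error * x for w, x in zip(weights, inputs)]
            # A lower threshold makes the unit fire more easily.
            threshold -= rate * error
    return weights, threshold


# Training examples for logical OR: (inputs, intended output).
examples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, threshold = train_unit(examples, n_inputs=2)
predictions = [fires(x, weights, threshold) for x, _ in examples]
```

After a few passes over the examples the error reaches zero and the weights stop changing, so `predictions` matches the intended outputs for all four input pairs.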

"Regular" vs. convolutional neural networks

Compared to "regular" neural networks, convolutional neural networks (CNNs) are better suited to image, video, and face recognition applications, but they have a more complex architecture. The main differences are:

1) The input is a 3-D image volume (height x width x channels) rather than a single vector.

2) A CNN employs the convolution operation and is built on a rather different architecture.

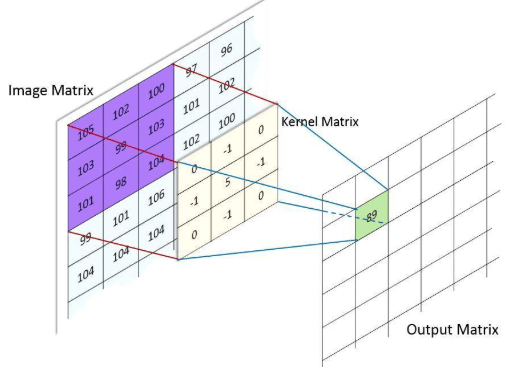

The convolution operation is a crucial part of any CNN. In general, convolution is a mathematical operation on two functions that produces a third function expressing how the shape of one is modified by the other. The mathematics behind it is elegant but fairly involved, and we will not cover it in detail here.
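Without going into the theory, the discrete one-dimensional form is easy to try out. The sketch below is a plain-Python illustration in which `convolve_1d`, the pulse signal, and the averaging kernel are all assumed names and values. It shows how one sequence reshapes another.

```python
def convolve_1d(f, g):
    """Full discrete convolution: out[n] is the sum over k of f[k] * g[n - k]."""
    out = [0] * (len(f) + len(g) - 1)
    for i, fi in enumerate(f):
        for j, gj in enumerate(g):
            out[i + j] += fi * gj
    return out


signal = [0, 0, 1, 1, 1, 0, 0]  # a rectangular pulse
kernel = [0.5, 0.5]             # averages each sample with its neighbour
smoothed = convolve_1d(signal, kernel)
```

The sharp edges of the pulse become gradual steps in `smoothed`: the kernel has modified the shape of the signal.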

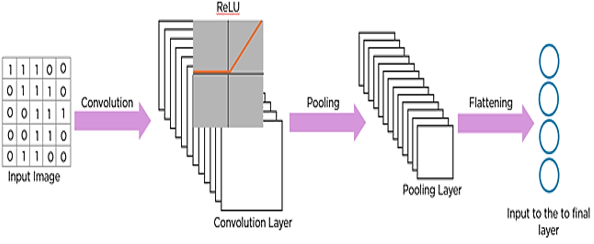

In image processing, the purpose of convolution is to extract an object's features locally. A simplified convolution routine can be described as follows. We treat an image as a matrix whose elements are numbers between 0 and 255. The size of this matrix is (image height) x (image width) x (number of image channels). The software scans one small part of the image at a time, usually a 3x3 window, and multiplies it by a filter (also called a kernel), as shown in the figure. We multiply each highlighted element of the image matrix by the corresponding element of the kernel matrix, sum all the products, and put the result in the output matrix at the position that corresponds to the center of the kernel in the image matrix. The procedure is repeated until the whole image has been scanned. Typically, a CNN has one or more layers of convolution units.
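The scanning routine described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions: a single-channel image, a hypothetical vertical-edge kernel, and no padding, so border pixels where the kernel would not fit are skipped and the output is smaller than the input. As in the description, the kernel is applied without flipping, which is the convention CNNs use.

```python
def convolve_2d(image, kernel):
    """Slide the kernel over the image; each output is a sum of elementwise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# A 5x5 single-channel image: dark on the left, bright on the right.
image = [[0, 0, 255, 255, 255] for _ in range(5)]

# Hypothetical kernel that responds to vertical edges.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve_2d(image, kernel)
```

The output is large where the window straddles the dark-to-bright boundary and zero where the window covers a uniform region, which is what "locally extracting a feature" means in practice.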

Convolutional units help to reduce the number of parameters in the network, resulting in a less complex model. They also take into account the information shared within a small neighborhood. This is especially important in applications such as image and video processing, because neighboring inputs, such as adjacent pixels or frames, normally carry related information.
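A rough count shows the scale of the saving. The sizes below (a 32x32 RGB image, 16 units, 16 filters of size 3x3) are illustrative assumptions, and bias terms are ignored for simplicity.

```python
def dense_weights(height, width, channels, units):
    """Weights needed when every unit connects to every input value."""
    return height * width * channels * units


def conv_weights(kernel_size, channels, filters):
    """Weights needed when each filter is a small kernel reused at every position."""
    return kernel_size * kernel_size * channels * filters


dense = dense_weights(32, 32, 3, units=16)
conv = conv_weights(3, channels=3, filters=16)
ratio = dense / conv
```

Because the same small kernel is reused at every image position, the convolutional layer in this example needs 432 weights where the fully connected one needs 49152.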

Generally, a CNN contains four types of layers: a convolution layer, a ReLU layer, a pooling layer, and a fully connected layer. The convolution layer uses the convolution operation, applying various filters to identify parts of the image such as corners and edges, and produces a convolved feature map. The next layer is ReLU (Rectified Linear Unit), which applies an activation function that introduces non-linearity. In practice, it replaces all negative values in the feature map with 0. The purpose of the pooling layer is to reduce the spatial size of the convolved feature map, which lowers the computational complexity. In practice, max pooling keeps only the maximum value within each small window of the feature map. The resulting pooled feature map is then converted into one long vector by a process called flattening. As a last step, this vector is fed into a "regular" fully connected neural network, which produces the final output.
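The steps that follow the convolution layer can be sketched as three small functions. This is a simplified plain-Python illustration, not a framework implementation: the function names, the 2x2 non-overlapping pooling window, and the sample feature map are assumptions.

```python
def relu(feature_map):
    """Replace every negative value with 0."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Keep the maximum of each non-overlapping size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled


def flatten(feature_map):
    """Turn a 2-D feature map into one long vector, row by row."""
    return [v for row in feature_map for v in row]


# A hypothetical 4x4 convolved feature map.
feature_map = [
    [1, -2, 3, 0],
    [-4, 5, -6, 7],
    [8, -9, 2, -1],
    [0, 3, -5, 4],
]

activated = relu(feature_map)
pooled = max_pool(activated)
vector = flatten(pooled)
```

The 4x4 map shrinks to 2x2 after pooling and to a vector of four values after flattening, which is the form a fully connected network expects as input.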

To summarize, a convolutional neural network uses a mathematical technique called convolution to extract the most relevant features from an image, which significantly increases the efficiency of image recognition. This architecture is currently the dominant one for recognizing objects in pictures and videos.

CNN-based algorithms are well suited to face recognition because of their accuracy, relative robustness, and ability to efficiently reduce the number of parameters in a network. According to Patrick Grother of NIST, one of the report's authors, the rapid advance of machine-learning tools has effectively revolutionized the industry.

References

- Machine Learning Projects for Mobile Applications, Karthikeyan, N. G., 2018.

- www.machinelearningguru.com

- https://www.nist.gov

- www.quora.com

- http://cs231n.github.io/convolutional-networks/#overview